Substrate Recipes 🍴😋🍴

A Hands-On Cookbook for Aspiring Blockchain Chefs

Substrate Recipes is a cookbook of working examples that demonstrate best practices when building blockchains with Substrate. Each recipe contains complete working code as well as a detailed writeup explaining the code.

How to Use This Book

You can read this book in any particular order. If you have a certain topic you want to dive into, or know the subject/keyword to look for, please use the search button (the small magnifier on top left) to search for the subject. The list is organized roughly in order of increasing complexity.

You can't learn to build blockchains by reading alone. As you work through the recipes, practice compiling, testing, and hacking on each Recipes. Play with the code, extract patterns, and apply them to a problem that you want to solve!

If you haven't already, you should probably clone this repository right now.

git clone https://github.com/substrate-developer-hub/recipes.git

Getting Help

When learning any new skill, you will inevitably get stuck at some point. When you do get stuck you can seek help in several ways:

- Ask a question on Stack Overflow

- Ask a question in the Substrate Technical Element channel

- Open a new issue against this repository

Prerequisites

Each recipe targets a specific aspect of Substrate development and explains the details of that aspect. In all recipes some basic familiarity with Substrate development and a working Rust environment are assumed. Generally speaking you should meet the following prerequisites:

- Have a working Substrate development environment. There are excellent docs on setting up a Substrate development environment.

- Understand the first ten chapters of The Rust Book. Rather than learning Rust before you learn Substrate, consider learning Rust as you learn Substrate.

- Complete the first few Official Substrate Tutorials.

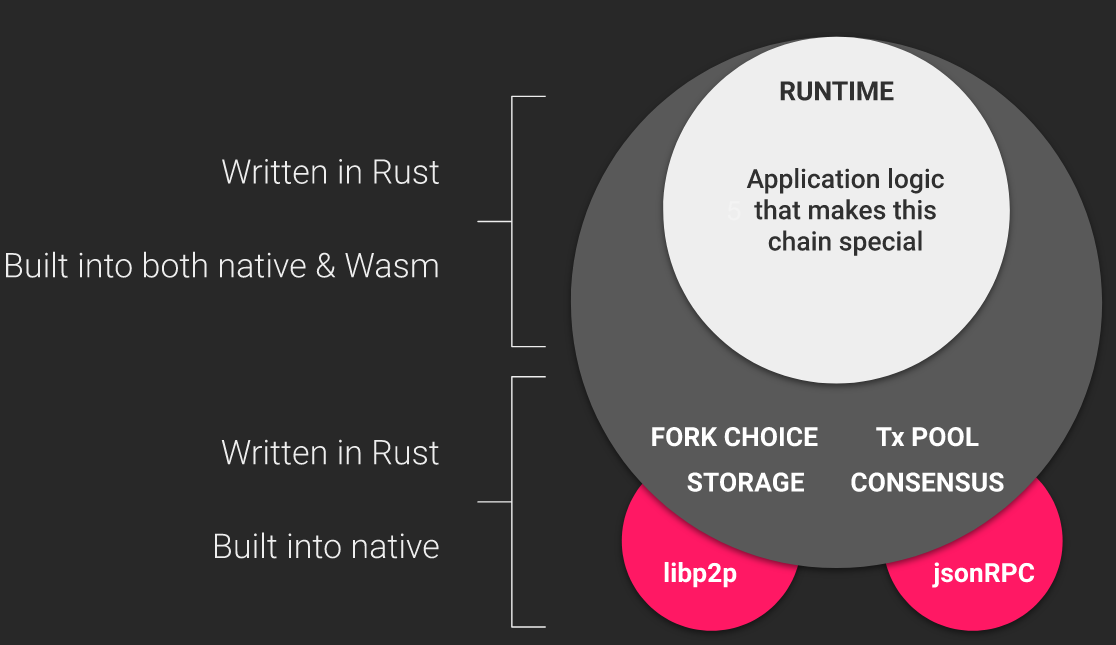

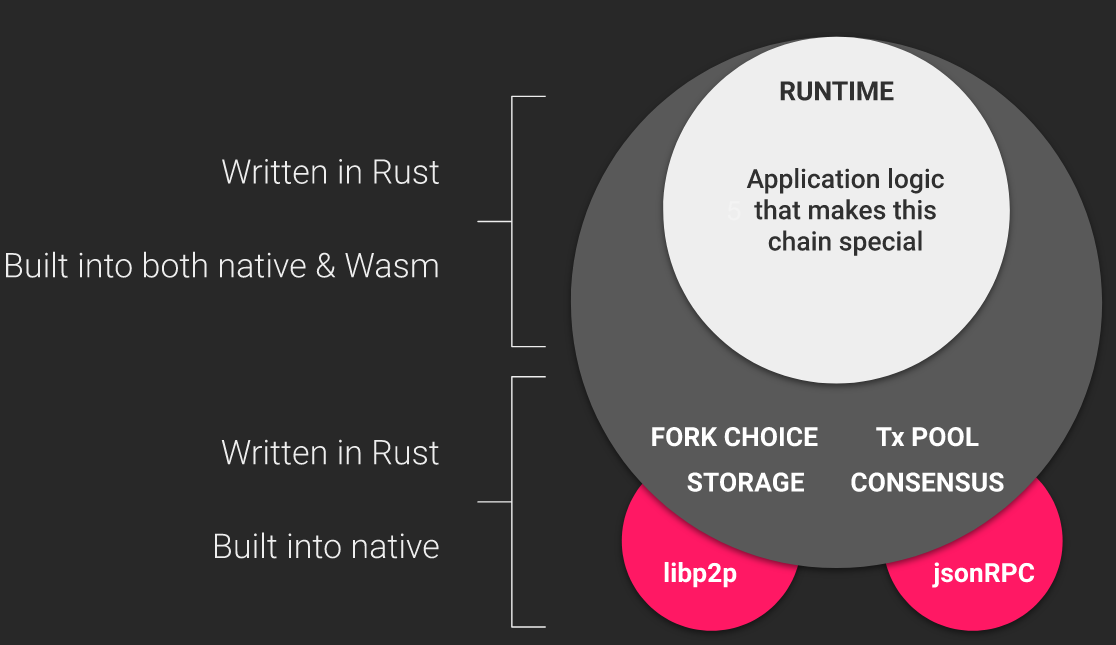

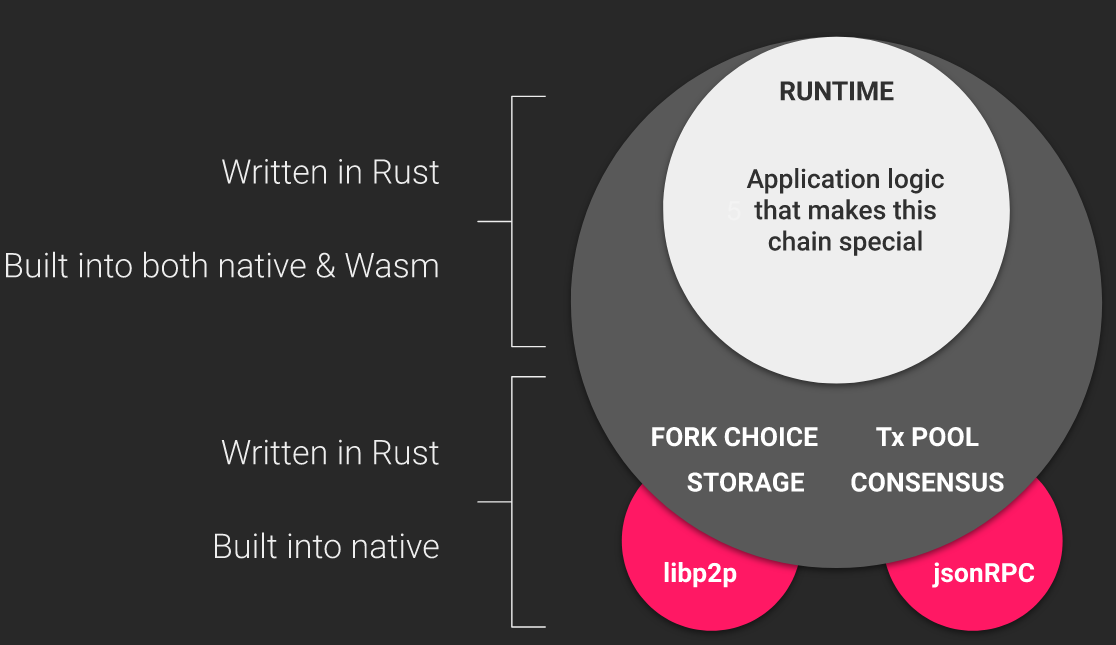

Structure of a Substrate Node

It is useful to recognize that coding is all about abstraction.

To understand how the code in this repository is organized, let's first take a look at how a Substrate node is constructed. Each node has many components that manage things like the transaction queue, communicating over a P2P network, reaching consensus on the state of the blockchain, and the chain's actual runtime logic. Each aspect of the node is interesting in its own right, and the runtime is particularly interesting because it contains the business logic (aka "state transition function") that codifies the chain's functionality.

Much, but not all, of the Recipes focuses on writing runtimes with FRAME, Parity's Framework for composing runtimes from individual building blocks called Pallets. Runtimes built with FRAME typically contain several such pallets. The kitchen node you built previously follows this paradigm.

The Directories in our Kitchen

If you haven't already, you should clone it now. There are five primary directories in this repository.

- Consensus: Consensus engines for use in Substrate nodes.

- Nodes: Complete Substrate nodes ready to run.

- Pallets: Pallets for use in FRAME-based runtimes.

- Runtimes: Runtimes for use in Substrate nodes.

- Text: Source of the book written in markdown. This is what you're reading right now.

Exploring those directories reveals a tree that looks like this

recipes

|

+-- consensus

|

+-- manual-seal

|

+-- sha3pow

|

+-- nodes

|

+-- basic-pow

|

+-- ...

|

+-- rpc-node

|

+-- pallets

|

+-- basic-token

|

+ ...

|

+-- weights

|

+-- runtimes

|

+-- api-runtime

|

+ ...

|

+-- weight-fee-runtime

|

+-- text

Inside the Kitchen Node

Let us take a deeper look at the Kitchen Node.

Looking inside the Kitchen Node's Cargo.toml file we see that it has many dependencies. Most of

them come from Substrate itself. Indeed most parts of this Kitchen Node are not unique or

specialized, and Substrate offers robust implementations that we can use. The runtime does not come

from Substrate. Rather, we use our super-runtime which is in the runtimes folder.

nodes/kitchen-node/Cargo.toml

# This node is compatible with any of the runtimes below

# ---

# Common runtime configured with most Recipes pallets.

runtime = { package = "super-runtime", path = "../../runtimes/super-runtime" }

# Runtime with custom weight and fee calculation.

# runtime = { package = "weight-fee-runtime", path = "../../runtimes/weight-fee-runtime"}

# Runtime with off-chain worker enabled.

# To use this runtime, compile the node with `ocw` feature enabled,

# `cargo build --release --features ocw`.

# runtime = { package = "ocw-runtime", path = "../../runtimes/ocw-runtime" }

# Runtime with custom runtime-api (custom API only used in rpc-node)

# runtime = { package = "api-runtime", path = "../../runtimes/api-runtime" }

# ---

The commented lines, quoted above, show that the Super Runtime is not the only runtime we could have chosen. We could also use the Weight-Fee runtime, and I encourage you to try that experiment (remember, instructions to re-compile the node are in the previous section).

Every node must have a runtime. You may confirm that by looking at the Cargo.toml files of the

other nodes included in our kitchen.

Inside the Super Runtime

Having seen that the Kitchen Node depends on a runtime, let us now look deeper at the Super Runtime.

runtimes/super-runtime/Cargo.toml

# -- snip --

# Substrate Pallets

balances = { package = 'pallet-balances', , ... }

transaction-payment = { package = 'pallet-transaction-payment', ,... }

# Recipe Pallets

adding-machine = { path = "../../pallets/adding-machine", default-features = false }

basic-token = { path = "../../pallets/basic-token", default-features = false }

Here we see that the runtime depends on many pallets. Some of these pallets come from Substrate itself. Indeed, Substrate offers a rich collection of commonly used pallets which you may use in your own runtimes. This runtime also contains several custom pallets that are written right here in our Kitchen.

Common Patterns

We've just observed the general pattern used throughout the recipes. From the inside out, we see a

piece of pallet code stored in pallets/<pallet-name>/src/lib.rs. The pallet is then included into

a runtime by adding its name and relative path in runtimes/<runtime-name>/Cargo.toml. That runtime

is then installed in a node by adding its name and relative path in nodes/<node-name>/Cargo.toml.

Some recipes explore aspects of Blockchain development that are outside of the runtime. Looking back to our node architecture at the beginning of this section, you can imagine that changing a node's RPC or Consensus would be conceptually similar to changing its runtime.

Pallets

Pallets are individual pieces of runtime logic for use in FRAME runtimes. Learn about them in this section of the cookbook.

Hello Substrate

This pallet has one dispatchable call that prints a message to the node's output. Printing to the node log is not common for runtimes, but can be quite useful when debugging and as a "hello world" example. Because this is the first pallet in the recipes, we'll also take a look at the general structure of a pallet.

No Std

The very first line of code tells the rust compiler that this crate should not use rust's standard library except when explicitly told to. This is useful because Substrate runtimes compile to Web Assembly where the standard library is not available.

#![cfg_attr(not(feature = "std"), no_std)]

Imports

Next, you'll find imports that come from various parts of the Substrate framework. All pallets will

import from a few common crates including

frame-support, and

frame-system. Complex pallets will have many

imports. The hello-substrate pallet uses these imports.

use frame_support::{debug, decl_module, dispatch::DispatchResult};

use frame_system::ensure_signed;

use sp_runtime::print;

Tests

Next we see a reference to the tests module. This pallet, as with most recipes pallets, has tests written in a separate file called

tests.rs.

Configuration Trait

Next, each pallet has a configuration trait which is called Config. The configuration trait can be

used to access features from other pallets, or constants that affect

the pallet's behavior. This pallet is simple enough that our configuration trait can remain empty,

although it must still exist.

pub trait Config: frame_system::Config {}

Dispatchable Calls

A Dispatchable call is a function that a blockchain user can call as part of an Extrinsic.

"Extrinsic" is Substrate jargon meaning a call from outside of the chain. Most of the time they are

transactions, and for now it is fine to think of them as transactions. Dispatchable calls are

defined in the

decl_module! macro.

decl_module! {

pub struct Module<T: Config> for enum Call where origin: T::Origin {

/// A function that says hello to the user by printing messages to the node log

#[weight = 10_000]

pub fn say_hello(origin) -> DispatchResult {

// --snip--

}

// More dispatchable calls could go here

}

}

As you can see, our hello-substrate pallet has a dispatchable call that takes a single argument,

called origin. The call returns a

DispatchResult which

can be either Ok(()) indicating that the call succeeded, or an Err which is demonstrated in most other recipes pallets.

Weight Annotations

Right before the hello-substrate function, we see the line #[weight = 10_000]. This line

attaches a default weight to the call. Ultimately weights affect the fees a user will have to pay to

call the function. Weights are a very interesting aspect of developing with Substrate, but they too

shall be covered later in the section on Weights. For now, and for many of

the recipes pallets, we will simply use the default weight as we have done here.

Inside a Dispatchable Call

Let's take a closer look at our dispatchable call.

#[pallet::weight(10_000)]

pub fn say_hello(origin: OriginFor<T>) -> DispatchResultWithPostInfo {

// Ensure that the caller is a regular keypair account

let caller = ensure_signed(origin)?;

// Print a message

print("Hello World");

// Inspecting a variable as well

debug::info!("Request sent by: {:?}", caller);

// Indicate that this call succeeded

Ok(().into())

}

This function essentially does three things. First, it uses the

ensure_signed function to ensure

that the caller of the function was a regular user who owns a private key. This function also

returns who that caller was. We store the caller's identity in the caller variable.

Second, it prints a message and logs the caller. Notice that we aren't using Rust's normal

println! macro, but rather a special

print function and

debug::info! macro. The reason for

this is explained in the next section.

Finally, the call returns Ok(()) to indicate that the call has succeeded. At a glance it seems

that there is no way for this call to fail, but this is not quite true. The ensure_signed

function, used at the beginning, can return an error if the call was not from a signed origin. This

is the first time we're seeing the important paradigm "Verify first, write last". In Substrate

development, it is important that you always ensure preconditions are met and return errors at the

beginning. After these checks have completed, then you may begin the function's computation.

Printing from the Runtime

Printing to the terminal from a Rust program is typically very simple using the println! macro.

However, Substrate runtimes are compiled to both Web Assembly and a regular native binary, and do

not have access to rust's standard library. That means we cannot use the regular println!. I

encourage you to modify the code to try using println! and confirm that it will not compile.

Nonetheless, printing a message from the runtime is useful both for logging information, and also

for debugging.

At the top of our pallet, we imported sp_runtime's

print function. This special function allows

the runtime to pass a message for printing to the outer part of the node which is not compiled to

Wasm and does have access to the standard library and can perform regular IO. This function is only

able to print items that implement the

Printable trait. Luckily all

the primitive types already implement this trait, and you can implement the trait for your own

datatypes too.

Print function note: To actually see the printed messages, we need to use the flag

-lruntime=debug when running the kitchen node. So, for the kitchen node, the command would become

./target/release/kitchen-node --dev -lruntime=debug.

The next line demonstrates using debug::info! macro to log to the screen and also inspecting the

variable's content. The syntax inside the macro is very similar to what regular rust macro

println! takes.

You can specify the logger target with

debug::debug!(target: "mytarget", "called by {:?}", sender);

Now you can filter logs with

kitchen-node --dev -lmytarget=debug

If you do not specify the logger target, it will be set to the crate's name (not to runtime!).

Runtime logger note: When we execute the runtime in native, debug::info! messages are printed.

However, if we execute the runtime in Wasm, then an additional step to initialise

RuntimeLogger is required:

debug::RuntimeLogger::init();

You'll need to call this inside every pallet dispatchable call before logging.

Using Events

Having a transaction included in a block does not guarantee that the function executed successfully. To verify that functions have executed successfully, emit an event at the bottom of the function body.

Events notify the off-chain world of successful state transitions.

Some Prerequisites

When using events, we have to include the Event type in our configuration trait. Although the

syntax is a bit complex, it is the same every time. If you are a skilled Rust programmer you will

recognize this as a series of trait bounds. If

you don't recognize this feature of Rust yet, don't worry; it is the same every time, so you can

just copy it and move on.

#[pallet::config]

pub trait Config: frame_system::Config {

/// Because this pallet emits events, it depends on the runtime's definition of an event.

type Event: From<Event<Self>> + IsType<<Self as frame_system::Config>::Event>;

}

Next we have to add a line of the #[pallet::generate_deposit(pub(super) fn deposit_event)] macro which generates the deposit_event

function we'll use later when emitting our events. Even experienced Rust programmers will not

recognize this syntax because it is unique to this macro. Just copy it each time.

#[pallet::event]

#[pallet::metadata(T::AccountId = "AccountId")]

#[pallet::generate_deposit(pub(super) fn deposit_event)]

pub enum Event<T: Config> {

/// Event documentation should end with an array that provides descriptive names for event

/// parameters. [something, who]

EmitInput(u32),

}

Declaring Events

To declare an event, use the

#[pallet::event] macro. Like any rust

enum, Events have names and can optionally carry data with them. The syntax is slightly different

depending on whether the events carry data of primitive types, or generic types from the pallet's

configuration trait. These two techniques are demonstrated in the simple-event and generic-event

pallets respectively.

Simple Events

The simplest example of an event uses the following syntax

#[pallet::event]

#[pallet::metadata(u32 = "Metadata")]

pub enum Event<T: Config> {

/// Set a value.

ValueSet(u32, T::AccountId),

}

Events with Generic Types

Sometimes, events might contain types from the pallet's Configuration Trait. In this case, it is necessary to specify additional syntax:

#[pallet::event]

pub enum Event<T: Config> {

EmitInput(u32),

}

This example also demonstrates how the where clause can be used to specify type aliasing for more

readable code.

Emitting Events

Events are emitted from dispatchable calls using the deposit_event method.

Events are not emitted on block 0. So any dispatchable calls made during genesis block formation will have no events emitted.

Simple Events

The event is emitted at the bottom of the do_something function body.

Self::deposit_event(Event::EmitInput(new_number));

Events with Generic Types

The syntax for deposit_event now takes the RawEvent type because it is generic over the pallet's

configuration trait.

#[pallet::generate_deposit(pub(super) fn deposit_event)]

Constructing the Runtime

For the first time in the recipes, our pallet has an associated type in its configuration trait. We

must specify this type when implementing its trait. In the case of the Event type, this is

entirely straight forward, and looks the same for both simple events and generic events.

impl simple_event::Config for Runtime {

type Event = Event;

}

Events, like dispatchable calls and storage items, requires a slight change to the line in

construct_runtime!. Notice that the <T> is necessary for generic events.

construct_runtime!(

pub enum Runtime where

Block = Block,

NodeBlock = opaque::Block,

UncheckedExtrinsic = UncheckedExtrinsic

{

// --snip--

GenericEvent: generic_event::{Module, Call, Event<T>},

SimpleEvent: simple_event::{Module, Call, Event},

}

);

Storage Maps

In this recipe, we will see how

to store a mapping from keys to values, similar to Rust's own

HashMap.

Declaring a StorageMap

We declare a single storage map with the following syntax:

#[pallet::storage]

#[pallet::getter(fn simple_map)]

pub(super) type SimpleMap<T: Config> =

StorageMap<_, Blake2_128Concat, T::AccountId, u32, ValueQuery>;

Much of this should look familiar to you from storage values. Reading the line from left to right we have:

SimpleMap- the name of the storage mapget(fn simple_map)- the name of a getter function that will return values from the map.: map hasher(blake2_128_concat)- beginning of the type declaration. This is a map and it will use theblake2_128_concathasher. More on this below.T::AccountId => u32- The specific key and value type of the map. This is a map fromAccountIds tou32s.

Choosing a Hasher

Although the syntax above is complex, most of it should be straightforward if you've understood the

recipe on storage values. The last unfamiliar piece of writing a storage map is choosing which

hasher to use. In general you should choose one of the three following hashers. The choice of hasher

will affect the performance and security of your chain. If you don't want to think much about this,

just choose blake2_128_concat and skip to the next section.

blake2_128_concat

This is a cryptographically secure hash function, and is always safe to use. It is reasonably

efficient, and will keep your storage tree balanced. You must choose this hasher if users of your

chain have the ability to affect the storage keys. In this pallet, the keys are AccountIds. At

first it may seem that the user doesn't affect the AccountId, but in reality a malicious user

can generate thousands of accounts and use the one that will affect the chain's storage tree in the

way the attacker likes. For this reason, we have chosen to use the blake2_128_concat hasher.

twox_64_concat

This hasher is not cryptographically secure, but is more efficient than blake2. Thus it represents trading security for performance. You should not use this hasher if chain users can affect the storage keys. However, it is perfectly safe to use this hasher to gain performance in scenarios where the users do not control the keys. For example, if the keys in your map are sequentially increasing indices and users cannot cause the indices to rapidly increase, then this is a perfectly reasonable choice.

identity

The identity "hasher" is really not a hasher at all, but merely an

identity function that returns the same value it

receives. This hasher is only an option when the key type in your storage map is already a hash,

and is not controllable by the user. If you're in doubt whether the user can influence the key just

use blake2.

The Storage Map API

This pallet demonstrated some of the most common methods available in a storage map including

insert, get, take, and contains_key.

// Insert

<SimpleMap<T>>::insert(&user, entry);

// Get

let entry = <SimpleMap<T>>::get(account);

// Take

let entry = <SimpleMap<T>>::take(&user);

// Contains Key

<SimpleMap<T>>::contains_key(&user)

The rest of the API is documented in the rustdocs on the

StorageMap trait. You do

not need to explicitly use this trait because the decl_storage! macro will do it for you if you

use a storage map.

Cache Multiple Calls

Calls to runtime storage have an associated cost and developers should strive to minimize the number of calls.

#[pallet::storage]

#[pallet::getter(fn some_copy_value)]

pub(super) type SomeCopyValue<T: Config> = StorageValue<_, u32, ValueQuery>;

#[pallet::storage]

#[pallet::getter(fn king_member)]

pub(super) type KingMember<T: Config> = StorageValue<_, T::AccountId, ValueQuery>;

#[pallet::storage]

#[pallet::getter(fn group_members)]

pub(super) type GroupMembers<T: Config> = StorageValue<_, Vec<T::AccountId>, ValueQuery>;

Copy Types

For Copy types, it is easy to reuse

previous storage calls by simply reusing the value, which is automatically cloned upon reuse. In the

code below, the second call is unnecessary:

#[pallet::call]

impl<T: Config> Pallet<T> {

/// (Copy) inefficient way of updating value in storage

///

/// storage value -> storage_value * 2 + input_val

#[pallet::weight(10_000)]

pub fn increase_value_no_cache(

origin: OriginFor<T>,

some_val: u32,

) -> DispatchResultWithPostInfo {

let _ = ensure_signed(origin)?;

let original_call = <SomeCopyValue<T>>::get();

let some_calculation = original_call

.checked_add(some_val)

.ok_or("addition overflowed1")?;

// this next storage call is unnecessary and is wasteful

let unnecessary_call = <SomeCopyValue<T>>::get();

// should've just used `original_call` here because u32 is copy

let another_calculation = some_calculation

.checked_add(unnecessary_call)

.ok_or("addition overflowed2")?;

<SomeCopyValue<T>>::put(another_calculation);

let now = <frame_system::Module<T>>::block_number();

Self::deposit_event(Event::InefficientValueChange(another_calculation, now));

Ok(().into())

}

Instead, the initial call value should be reused. In this example, the SomeCopyValue value is

Copy so we should prefer the following

code without the unnecessary second call to storage:

#[pallet::weight(10_000)]

pub fn increase_value_w_copy(

origin: OriginFor<T>,

some_val: u32,

) -> DispatchResultWithPostInfo {

let _ = ensure_signed(origin)?;

let original_call = <SomeCopyValue<T>>::get();

let some_calculation = original_call

.checked_add(some_val)

.ok_or("addition overflowed1")?;

// uses the original_call because u32 is copy

let another_calculation = some_calculation

.checked_add(original_call)

.ok_or("addition overflowed2")?;

<SomeCopyValue<T>>::put(another_calculation);

let now = <frame_system::Module<T>>::block_number();

Self::deposit_event(Event::BetterValueChange(another_calculation, now));

Ok(().into())

}

Clone Types

If the type was not Copy, but was Clone,

then it is still better to clone the value in the method than to make another call to runtime

storage.

The runtime methods enable the calling account to swap the T::AccountId value in storage if

- the existing storage value is not in

GroupMembersAND - the calling account is in

GroupMembers

The first implementation makes a second unnecessary call to runtime storage instead of cloning the

call for existing_key:

#[pallet::weight(10_000)]

pub fn swap_king_no_cache(origin: OriginFor<T>) -> DispatchResultWithPostInfo {

let new_king = ensure_signed(origin)?;

let existing_king = <KingMember<T>>::get();

// only places a new account if

// (1) the existing account is not a member &&

// (2) the new account is a member

ensure!(

!Self::is_member(&existing_king),

"current king is a member so maintains priority"

);

ensure!(

Self::is_member(&new_king),

"new king is not a member so doesn't get priority"

);

// BAD (unnecessary) storage call

let old_king = <KingMember<T>>::get();

// place new king

<KingMember<T>>::put(new_king.clone());

Self::deposit_event(Event::InefficientKingSwap(old_king, new_king));

Ok(().into())

}

If the existing_key is used without a clone in the event emission instead of old_king, then

the compiler returns the following error:

error[E0382]: use of moved value: `existing_king`

--> src/lib.rs:93:63

|

80 | let existing_king = <KingMember<T>>::get();

| ------------- move occurs because `existing_king` has type `<T as frame_system::Config>::AccountId`, which does not implement the `Copy` trait

...

85 | ensure!(!Self::is_member(existing_king), "is a member so maintains priority");

| ------------- value moved here

...

93 | Self::deposit_event(RawEvent::InefficientKingSwap(existing_king, new_king));

| ^^^^^^^^^^^^^ value used here after move

error: aborting due to previous error

For more information about this error, try `rustc --explain E0382`.

error: Could not compile `storage-cache`.

To learn more, run the command again with --verbose.

Fixing this only requires cloning the original value before it is moved:

#[pallet::weight(10_000)]

pub fn swap_king_with_cache(origin: OriginFor<T>) -> DispatchResultWithPostInfo {

let new_king = ensure_signed(origin)?;

let existing_king = <KingMember<T>>::get();

// prefer to clone previous call rather than repeat call unnecessarily

let old_king = existing_king.clone();

// only places a new account if

// (1) the existing account is not a member &&

// (2) the new account is a member

ensure!(

!Self::is_member(&existing_king),

"current king is a member so maintains priority"

);

ensure!(

Self::is_member(&new_king),

"new king is not a member so doesn't get priority"

);

// <no (unnecessary) storage call here>

// place new king

<KingMember<T>>::put(new_king.clone());

Self::deposit_event(Event::BetterKingSwap(old_king, new_king));

Ok(().into())

}

Not all types implement Copy or

Clone, so it is important to discern other

patterns that minimize and alleviate the cost of calls to storage.

Using Vectors as Sets

A Set is an unordered data structure that stores entries without duplicates. Substrate's storage API does not provide a way to declare sets explicitly, but they can be implemented using either vectors or maps.

This recipe demonstrates how to implement a storage set on top of a vector, and explores the

performance of the implementation. When implementing a set in your own runtime, you should compare

this technique to implementing a map-set.

In this pallet we implement a set of AccountIds. We do not use the set for anything in this

pallet; we simply maintain the set. Using the set is demonstrated in the recipe on

pallet coupling. We provide dispatchable calls to add and remove members,

ensuring that the number of members never exceeds a hard-coded maximum.

/// A maximum number of members. When membership reaches this number, no new members may join.

pub const MAX_MEMBERS: usize = 16;

Storage Item

We will store the members of our set in a Rust

Vec. A Vec is a collection of elements that

is ordered and may contain duplicates. Because the Vec provides more functionality than our set

needs, we are able to build a set from the Vec. We declare our single storage item as so

#[pallet::storage]

#[pallet::getter(fn members)]

pub(super) type Members<T: Config> = StorageValue<_, Vec<T::AccountId>, ValueQuery>;

In order to use the Vec successfully as a set, we will need to manually ensure that no duplicate

entries are added. To ensure reasonable performance, we will enforce that the Vec always remains

sorted. This allows for quickly determining whether an item is present using a

binary search.

Adding Members

Any user may join the membership set by calling the add_member dispatchable, providing they are

not already a member and the membership limit has not been reached. We check for these two

conditions first, and then insert the new member only after we are sure it is safe to do so. This is

an example of the mnemonic idiom, "verify first write last".

#[pallet::weight(10_000)]

pub fn add_member(origin: OriginFor<T>) -> DispatchResultWithPostInfo {

let new_member = ensure_signed(origin)?;

let mut members = Members::<T>::get();

ensure!(

members.len() < MAX_MEMBERS,

Error::<T>::MembershipLimitReached

);

// We don't want to add duplicate members, so we check whether the potential new

// member is already present in the list. Because the list is always ordered, we can

// leverage the binary search which makes this check O(log n).

match members.binary_search(&new_member) {

// If the search succeeds, the caller is already a member, so just return

Ok(_) => Err(Error::<T>::AlreadyMember.into()),

// If the search fails, the caller is not a member and we learned the index where

// they should be inserted

Err(index) => {

members.insert(index, new_member.clone());

Members::<T>::put(members);

Self::deposit_event(Event::MemberAdded(new_member));

Ok(().into())

}

}

}

If it turns out that the caller is not already a member, the binary search will fail. In this case

it still returns the index into the Vec at which the member would have been stored had they been

present. We then use this information to insert the member at the appropriate location, thus

maintaining a sorted Vec.

Removing a Member

Removing a member is straightforward. We begin by looking for the caller in the list. If not present, there is no work to be done. If the caller is present, the search algorithm returns her index, and she can be removed.

#[pallet::weight(10_000)]

pub fn remove_member(origin: OriginFor<T>) -> DispatchResultWithPostInfo {

let old_member = ensure_signed(origin)?;

let mut members = Members::<T>::get();

// We have to find out if the member exists in the sorted vec, and, if so, where.

match members.binary_search(&old_member) {

// If the search succeeds, the caller is a member, so remove her

Ok(index) => {

members.remove(index);

Members::<T>::put(members);

Self::deposit_event(Event::MemberRemoved(old_member));

Ok(().into())

}

// If the search fails, the caller is not a member, so just return

Err(_) => Err(Error::<T>::NotMember.into()),

}

}

Performance

Now that we have built our set, let's analyze its performance in some common operations.

Membership Check

In order to check for the presence of an item in a vec-set, we make a single storage read, decode

the entire vector, and perform a binary search.

DB Reads: O(1) Decoding: O(n) Search: O(log n)

Updating

Updates to the set, such as adding and removing members as we demonstrated, requires first

performing a membership check. It also requires re-encoding the entire Vec and storing it back in

the database. Finally, it still costs the normal

amortized constant time associated with mutating a

Vec.

DB Writes: O(1) Encoding: O(n)

Iteration

Iterating over all items in a vec-set is achieved by using the Vec's own

iter method. The entire set can

be read from storage in one go, and each item must be decoded. Finally, the actual processing you do

on the items will take some time.

DB Reads: O(1) Decoding: O(n) Processing: O(n)

Because accessing the database is a relatively slow operation, reading the entire list in a single

read is a big win. If you need to iterate over the data frequently, you may want a vec-set.

A Note on Weights

It is always important that the weight associated with your dispatchables represent the actual time it takes to execute them. In this pallet, we have provided an upper bound on the size of the set, which places an upper bound on the computation - this means we can use constant weight annotations. Your set operations should either have a maximum size or a custom weight function that captures the computation appropriately.

Using Maps as Sets

A Set is an unordered data structure that stores entries without duplicates. Substrate's storage API does not provide a way to declare sets explicitly, but they can be implemented using either vectors or maps.

This recipe shows how to implement a storage set on top of a map, and explores the performance of

the implementation. When implementing a set in your own runtime, you should compare this technique

to implementing a vec-set.

In this pallet we implement a set of AccountIds. We do not use the set for anything in this

pallet; we simply maintain its membership. Using the set is demonstrated in the recipe on

pallet coupling. We provide dispatchable calls to add and remove members,

ensuring that the number of members never exceeds a hard-coded maximum.

/// A maximum number of members. When membership reaches this number, no new members may join.

pub const MAX_MEMBERS: u32 = 16;

Storage Item

We will store the members of our set as the keys in one of Substrate's

StorageMaps. There is also

a recipe specifically about using storage maps. The storage map itself does not

track its size internally, so we introduce a second storage value for this purpose.

#[pallet::storage]

#[pallet::getter(fn members)]

pub(super) type Members<T: Config> =

StorageMap<_, Blake2_128Concat, T::AccountId, (), ValueQuery>;

#[pallet::storage]

pub(super) type MemberCount<T> = StorageValue<_, u32, ValueQuery>;

The value stored in the map is () because we only care about the keys.

Adding Members

Any user may join the membership set by calling the add_member dispatchable, so long as they are

not already a member and the membership limit has not been reached. We check for these two

conditions first, and then insert the new member only after we are sure it is safe to do so.

#[pallet::weight(10_000)]

pub fn add_member(origin: OriginFor<T>) -> DispatchResultWithPostInfo {

let new_member = ensure_signed(origin)?;

let member_count = MemberCount::<T>::get();

ensure!(

member_count < MAX_MEMBERS,

Error::<T>::MembershipLimitReached

);

When we successfully add a new member, we also manually update the size of the set.

Removing a Member

Removing a member is straightforward. We begin by looking for the caller in the list. If not present, there is no work to be done. If the caller is present, we simply remove them and update the size of the set.

#[pallet::weight(10_000)]

pub fn remove_member(origin: OriginFor<T>) -> DispatchResultWithPostInfo {

let old_member = ensure_signed(origin)?;

ensure!(

Members::<T>::contains_key(&old_member),

Error::<T>::NotMember

);

Performance

Now that we have built our set, let's analyze its performance in some common operations.

Membership Check

In order to check for the presence of an item in a map set, we make a single storage read. If we only care about the presence or absence of the item, we don't even need to decode it. This constant time membership check is the greatest strength of a map set.

DB Reads: O(1)

Updating

Updates to the set, such as adding and removing members as we demonstrated, requires first performing a membership check. Additions also require encooding the new item.

DB Reads: O(1) Encoding: O(1) DB Writes: O(1)

If your set operations will require a lot of membership checks or mutation of individual items, you

may want a map-set.

Iteration

Iterating over all items in a map-set is achieved by using the

IterableStorageMap trait,

which iterates (key, value) pairs (although in this case, we don't care about the values). Because

each map entry is stored as an individual trie node, iterating a map set requires a database read

for each item. Finally, the actual processing of the items will take some time.

DB Reads: O(n) Decoding: O(n) Processing: O(n)

Because accessing the database is a relatively slow operation, returning to the database for each

item is quite expensive. If your set operations will require frequent iterating, you will probably

prefer a vec-set.

A Note on Weights

It is always important that the weight associated with your dispatchables represent the actual time it takes to execute them. In this pallet, we have provided an upper bound on the size of the set, which places an upper bound on the computation - this means we can use constant weight annotations. Your set operations should either have a maximum size or a custom weight function that captures the computation appropriately.

Efficent Subgroup Removal by Subkey: Double Maps

For some runtimes, it may be necessary to remove a subset of values in a key-value mapping. If the

subset maintain an associated identifier type, this can be done in a clean way with the

double_map via the

remove_prefix api.

pub type GroupIndex = u32; // this is Encode (which is necessary for double_map)

#[pallet::storage]

#[pallet::getter(fn member_score)]

pub(super) type MemberScore<T: Config> = StorageDoubleMap<

_,

Blake2_128Concat,

GroupIndex,

Blake2_128Concat,

T::AccountId,

u32,

ValueQuery,

>;

#[pallet::storage]

#[pallet::getter(fn group_membership)]

pub(super) type GroupMembership<T: Config> =

StorageMap<_, Blake2_128Concat, T::AccountId, GroupIndex, ValueQuery>;

#[pallet::storage]

#[pallet::getter(fn all_members)]

pub(super) type AllMembers<T: Config> = StorageValue<_, Vec<T::AccountId>, ValueQuery>;

For the purposes of this example, store the scores of each member in a map that associates this

u32 value with two keys: (1) a GroupIndex identifier, and (2) the member's AccountId. This

allows for efficient removal of all values associated with a specific GroupIndex identifier.

#[pallet::weight(10_000)]

pub fn remove_group_score(

origin: OriginFor<T>,

group: GroupIndex,

) -> DispatchResultWithPostInfo {

let member = ensure_signed(origin)?;

let group_id = <GroupMembership<T>>::get(member);

// check that the member is in the group

ensure!(

group_id == group,

"member isn't in the group, can't remove it"

);

// remove all group members from MemberScore at once

<MemberScore<T>>::remove_prefix(&group_id);

Self::deposit_event(Event::RemoveGroup(group_id));

Ok(().into())

}

Using and Storing Structs

In Rust, a struct, or structure, is a custom data type that lets you name and package together

multiple related values that make up a meaningful group. If you’re familiar with an object-oriented

language, a struct is like an object’s data attributes (read more in

The Rust Book).

Defining a Struct

To define a simple custom struct for the runtime, the following syntax may be used:

#[derive(Encode, Decode, Default, Clone, PartialEq)]

pub struct MyStruct {

some_number: u32,

optional_number: Option<u32>,

}

In the code snippet above, the

derive macro is declared to ensure

MyStruct conforms to shared behavior according to the specified

traits:

Encode, Decode, Default, Clone, PartialEq. If you wish the store this struct in blockchain

storage, you will need to derive (or manually ipmlement) each of these traits.

To use the Encode and Decode traits, it is necessary to import them.

use frame_support::codec::{Encode, Decode};

Structs with Generic Fields

The simple struct shown earlier only uses Rust primitive types for its fields. In the common case where you want to store types that come from your pallet's configuration trait (or the configuration trait of another pallet in your runtime), you must use generic type parameters in your struct's definition.

#[derive(Encode, Decode, Clone, Default, RuntimeDebug)]

pub struct InnerThing<Hash, Balance> {

number: u32,

hash: Hash,

balance: Balance,

}

Here you can see that we want to store items of type Hash and Balance in the struct. Because

these types come from the system and balances pallets' configuration traits, we must specify them as

generics when declaring the struct.

It is often convenient to make a type alias that takes T, your pallet's configuration trait, as a

single type parameter. Doing so simply saves you typing in the future.

type InnerThingOf<T> = InnerThing<<T as frame_system::Config>::Hash, <T as pallet_balances::Config>::Balance>;

Structs in Storage

Using one of our structs as a storage item is not significantly different than using a primitive

type. When using a generic struct, we must supply all of the generic type parameters. This snippet

shows how to supply thos parameters when you have a type alias (like we do for InnerThing) as well

as when you don't. Whether to include the type alias is a matter of style and taste, but it is

generally preferred when the entire type exceeds the preferred line length.

#[pallet::storage]

#[pallet::getter(fn inner_things_by_numbers)]

pub(super) type InnerThingsByNumbers<T> =

StorageMap<_, Blake2_128Concat, u32, InnerThingOf<T>, ValueQuery>;

#[pallet::storage]

#[pallet::getter(fn super_things_by_super_numbers)]

pub(super) type SuperThingsBySuperNumbers<T: Config> =

StorageMap<_, Blake2_128Concat, u32, SuperThing<T::Hash, T::Balance>, ValueQuery>;

Interacting with the storage maps is now exactly as it was when we didn't use any custom structs

#[pallet::weight(10_000)]

pub fn insert_inner_thing(

origin: OriginFor<T>,

number: u32,

hash: T::Hash,

balance: T::Balance,

) -> DispatchResultWithPostInfo {

let _ = ensure_signed(origin)?;

let thing = InnerThing {

number,

hash,

balance,

};

<InnerThingsByNumbers<T>>::insert(number, thing);

Self::deposit_event(Event::NewInnerThing(number, hash, balance));

Ok(().into())

}

Nested Structs

Structs can also contain other structs as their fields. We have demonstrated this with the type

SuperThing. As you see, any generic types needed by the inner struct must also be supplied to the

outer.

#[derive(Encode, Decode, Default, RuntimeDebug)]

pub struct SuperThing<Hash, Balance> {

super_number: u32,

inner_thing: InnerThing<Hash, Balance>,

}

Ringbuffer Queue

Building a transient adapter on top of storage.

This pallet provides a trait and implementation for a ringbuffer that abstracts over storage items and presents them as a FIFO queue.

When building more sophisticated pallets you might notice a need for more complex data structures

stored in storage. This recipe shows how to build a transient storage adapter by walking through the

implementation of a ringbuffer FIFO queue. The adapter in this recipe manages a queue that is

persisted as a StorageMap and a (start, end) range in storage.

The

ringbuffer-queue/src/lib.rs

file contains the usage of the transient storage adapter while

ringbuffer-queue/src/ringbuffer.rs

contains the implementation.

Defining the RingBuffer Trait

First we define the queue interface we want to use:

pub trait RingBufferTrait<Item>

where

Item: Codec + EncodeLike,

{

/// Store all changes made in the underlying storage.

fn commit(&self);

/// Push an item onto the end of the queue.

fn push(&mut self, i: Item);

/// Pop an item from the start of the queue.

fn pop(&mut self) -> Option<Item>;

/// Return whether the queue is empty.

fn is_empty(&self) -> bool;

}

It defines the usual push, pop and is_empty functions we expect from a queue as well as a

commit function that will be used to sync the changes made to the underlying storage.

Specifying the RingBuffer Transient

Now we want to add an implementation of the trait. We will be storing the start and end of the ringbuffer separately from the actual items and will thus need to store these in our struct:

pub struct RingBufferTransient<Index>

where

Index: Codec + EncodeLike + Eq + Copy,

{

start: Index,

end: Index,

}

Defining the Storage Interface

In order to access the underlying storage we will also need to include the bounds (we will call the

type B) and the item storage (whose type will be M). In order to specify the constraints on the

storage map (M) we will also need to specify the Item type. This results in the following struct

definition:

pub struct RingBufferTransient<Item, B, M, Index>

where

Item: Codec + EncodeLike,

B: StorageValue<(Index, Index), Query = (Index, Index)>,

M: StorageMap<Index, Item, Query = Item>,

Index: Codec + EncodeLike + Eq + Copy,

{

start: Index,

end: Index,

_phantom: PhantomData<(Item, B, M)>,

}

The bounds B will be a StorageValue storing a tuple of indices (Index, Index). The item

storage will be a StorageMap mapping from our Index type to the Item type. We specify the

associated Query type for both of them to help with type inference (because the value returned can

be different from the stored representation).

The Codec and

EncodeLike

type constraints make sure that both items and indices can be stored in storage.

We need the PhantomData in order

to "hold on to" the types during the lifetime of the transient object.

The Complete Type

There are two more alterations we will make to our struct to make it work well:

type DefaultIdx = u16;

pub struct RingBufferTransient<Item, B, M, Index = DefaultIdx>

where

Item: Codec + EncodeLike,

B: StorageValue<(Index, Index), Query = (Index, Index)>,

M: StorageMap<Index, Item, Query = Item>,

Index: Codec + EncodeLike + Eq + WrappingOps + From<u8> + Copy,

{

start: Index,

end: Index,

_phantom: PhantomData<(Item, B, M)>,

}

We specify a default type for Index and define it as u16 to allow for 65536 entries in the

ringbuffer per default. We also add the WrappingOps and From<u8> type bounds to enable the kind

of operations we need in our implementation. More details in the implementation

section, especially in the WrappingOps subsection.

Implementation of the RingBuffer

Now that we have the type definition for RingBufferTransient we need to write the implementation.

Instantiating the Transient

First we need to specify how to create a new instance by providing a new function:

impl<Item, B, M, Index> RingBufferTransient<Item, B, M, Index>

where // ... same where clause as the type, elided here

{

pub fn new() -> RingBufferTransient<Item, B, M, Index> {

let (start, end) = B::get();

RingBufferTransient {

start, end, _phantom: PhantomData,

}

}

}

Here we access the bounds stored in storage to initialize the transient.

Aside: Of course we could also provide a

with_boundsfunction that takes the bounds as a parameter. Feel free to add that function as an exercise.

Second Aside: This

B::get()is one of the reasons for specifying theQueryassociated type on theStorageValuetype constraint.

Implementing the RingBufferTrait

We will now implement the RingBufferTrait:

impl<Item, B, M, Index> RingBufferTrait<Item> for RingBufferTransient<Item, B, M, Index>

where // same as the struct definition

Item: Codec + EncodeLike,

B: StorageValue<(Index, Index), Query = (Index, Index)>,

M: StorageMap<Index, Item, Query = Item>,

Index: Codec + EncodeLike + Eq + WrappingOps + From<u8> + Copy,

{

fn commit(&self) {

B::put((self.start, self.end));

}

commit just consists of putting the potentially changed bounds into storage. You will notice that

we don't update the bounds' storage when changing them in the other functions.

fn is_empty(&self) -> bool {

self.start == self.end

}

The is_empty function just checks whether the start and end bounds have the same value to

determine whether the queue is empty, thus avoiding expensive storage accesses. This means we need

to uphold the corresponding invariant in the other (notably the push) functions.

fn push(&mut self, item: Item) {

M::insert(self.end, item);

// this will intentionally overflow and wrap around when bonds_end

// reaches `Index::max_value` because we want a ringbuffer.

let next_index = self.end.wrapping_add(1.into());

if next_index == self.start {

// queue presents as empty but is not

// --> overwrite the oldest item in the FIFO ringbuffer

self.start = self.start.wrapping_add(1.into());

}

self.end = next_index;

}

In the push function, we insert the pushed item into the map and calculate the new bounds by

using the wrapping_add function. This way our ringbuffer will wrap around when reaching

max_value of the Index type. This is why we need the WrappingOps type trait for Index.

The if is necessary because we need to keep the invariant that start == end means that the queue

is empty, otherwise we would need to keep track of this state separately. We thus "toss away" the

oldest item in the queue if a new item is pushed into a full queue by incrementing the start index.

Note: The

WrappingOpsTraitThe ringbuffer should be agnostic to the concrete

Indextype used. In order to decrement and increment the start and end index, though, any concrete type needs to implementwrapping_addandwrapping_sub. Becausestddoes not provide such a trait, we need another way to require this behavior. We just implement our own traitWrappingOpsfor the types we want to support (u8,u16,u32andu64).

The last function we implement is pop:

fn pop(&mut self) -> Option<Item> {

if self.is_empty() {

return None;

}

let item = M::take(self.start);

self.start = self.start.wrapping_add(1.into());

item.into()

}

We can return None on is_empty because we are upholding the invariant. If the queue is not empty

we take the value at self.start from storage, i.e. the first value is removed from storage and

passed to us. We then increment self.start to point to the new first item of the queue, again

using the wrapping_add to get the ringbuffer behavior.

Implementing Drop

In order to make the usage more ergonomic and to avoid synchronization errors (where the storage map

diverges from the bounds) we also implement the

Drop trait:

impl<Item, B, M, Index> Drop for RingBufferTransient<Item, B, M, Index>

where // ... same where clause elided

{

fn drop(&mut self) {

<Self as RingBufferTrait<Item>>::commit(self);

}

}

On drop, we commit the bounds to storage. With this implementation of Drop, commit is called

when our transient goes out of scope, making sure that the storage state is consistent for the next

call to the using pallet.

Typical Usage

The

lib.rs

file of the pallet shows typical usage of the transient.

impl<T: Config> Module<T> {

fn queue_transient() -> Box<dyn RingBufferTrait<ValueStruct>> {

Box::new(RingBufferTransient::<

ValueStruct,

<Self as Store>::BufferRange,

<Self as Store>::BufferMap,

BufferIndex,

>::new())

}

}

First we define a constructor function (queue_transient) so we don't have to specify the types

every time we want to access the transient. This function constructs a ringbuffer transient and

returns it as a boxed trait object. See the Rust book's section on

trait objects

for an explanation of why we need a boxed trait object (defined with the syntax dyn TraitName)

when using dynamic dispatch.

The add_multiple function shows the actual typical usage of our transient:

pub fn add_multiple(origin, integers: Vec<i32>, boolean: bool) -> DispatchResult {

let _user = ensure_signed(origin)?;

let mut queue = Self::queue_transient();

for integer in integers {

queue.push(ValueStruct{ integer, boolean });

}

Ok(())

} // commit happens on drop

Here we use the queue_transient function defined above to get a queue object. We then push

into it repeatedly with commit happening on drop of the queue object at the end of the

function. pop works analogously and can of course be intermixed with pushes.

Basic Token

This recipe demonstrates a simple but functional token in a pallet.

Mapping Accounts to Balances

Mappings are a very powerful primitive. A stateful cryptocurrency might store a mapping between accounts and balances. Likewise, mappings prove useful when representing owned data. By tracking ownership with maps, it is easy manage permissions for modifying values specific to individual users or groups.

Storage Items

The primary storage item is the mapping between AccountIds and Balances described above. Every account that holds tokens appears as a key in that map and its value is the number of tokens it holds.

The next two storage items set the total supply of the token and keep track of whether the token has been initialized yet.

#[pallet::storage]

#[pallet::getter(fn get_balance)]

pub(super) type Balances<T: Config> =

StorageMap<_, Blake2_128Concat, T::AccountId, u64, ValueQuery>;

#[pallet::type_value]

pub(super) fn TotalSupplyDefaultValue<T: Config>() -> u64 {

21000000

}

#[pallet::storage]

#[pallet::getter(fn is_init)]

pub(super) type Init<T: Config> = StorageValue<_, bool, ValueQuery>;

Because users can influence the keys in our storage map, we've chosen the blake2_128_concat hasher

as described in the recipe on storage mapss.

Events and Errors

The pallet defines events and errors for common lifecycle events such as successful and failed transfers, and successful and failed initialization.

#[pallet::event]

#[pallet::metadata(T::AccountId = "AccountId")]

#[pallet::generate_deposit(pub (super) fn deposit_event)]

pub enum Event<T: Config> {

/// Token was initialized by user

Initialized(T::AccountId),

/// Tokens successfully transferred between users

Transfer(T::AccountId, T::AccountId, u64), // (from, to, value)

}

#[pallet::error]

pub enum Error<T> {

/// Attempted to initialize the token after it had already been initialized.

AlreadyInitialized,

/// Attempted to transfer more funds than were available

InsufficientFunds,

}

Initializing the Token

In order for the token to be useful, some accounts need to own it. There are many possible ways to

initialize a token including genesis config, claims process, lockdrop, and many more. This pallet

will use a simple process where the first user to call the init function receives all of the

funds. The total supply is hard-coded in the pallet in a fairly naive way: It is specified as the

default value in the decl_storage! block.

#[pallet::weight(10_000)]

pub fn init(_origin: OriginFor<T>) -> DispatchResultWithPostInfo {

let sender = ensure_signed(_origin)?;

ensure!(!Self::is_init(), <Error<T>>::AlreadyInitialized);

<Balances<T>>::insert(sender, Self::total_supply());

Init::<T>::put(true);

Ok(().into())

}

As usual, we first check for preconditions. In this case that means making sure that the token is not already initialized. Then we do any mutation necessary.

Transferring Tokens

To transfer tokens, a user who owns some tokens calls the transfer method specifying the recipient

and the amount of tokens to transfer as parameters.

We again check for error conditions before mutating storage. In this case it is not necessary to

check whether the token has been initialized. If it has not, nobody has any funds and the transfer

will simply fail with InsufficientFunds.

#[pallet::weight(10_000)]

pub fn transfer(

_origin: OriginFor<T>,

to: T::AccountId,

value: u64,

) -> DispatchResultWithPostInfo {

let sender = ensure_signed(_origin)?;

let sender_balance = Self::get_balance(&sender);

let receiver_balance = Self::get_balance(&to);

// Calculate new balances

let updated_from_balance = sender_balance

.checked_sub(value)

.ok_or(<Error<T>>::InsufficientFunds)?;

let updated_to_balance = receiver_balance

.checked_add(value)

.expect("Entire supply fits in u64; qed");

// Write new balances to storage

<Balances<T>>::insert(&sender, updated_from_balance);

<Balances<T>>::insert(&to, updated_to_balance);

Self::deposit_event(Event::Transfer(sender, to, value));

Ok(().into())

}

Don't Panic!

When adding the incoming balance, notice the peculiar .expect method. In Substrate, your runtime must never panic. To encourage careful thinking about your code, you use the .expect

method and provide a proof of why the potential panic will never happen.

Configurable Pallet Constants

To declare constant values within a runtime, it is necessary to import the

Get trait from frame_support

use frame_support::traits::Get;

Configurable constants are declared as associated types in the pallet's configuration trait using

the Get<T> syntax for any type T.

pub trait Config: frame_system::Config {

type Event: From<Event> + Into<<Self as frame_system::Config>::Event>;

/// Maximum amount added per invocation

type MaxAddend: Get<u32>;

/// Frequency with which the stored value is deleted

type ClearFrequency: Get<Self::BlockNumber>;

}

In order to make these constants and their values appear in the runtime metadata, it is necessary to

declare them with the const syntax. Usually constants are declared at

the top of this block, right after fn deposit_event.

#[pallet::config]

pub trait Config: frame_system::Config {

type Event: From<Event> + IsType<<Self as frame_system::Config>::Event>;

/// Maximum amount added per invocation

type MaxAddend: Get<u32>;

/// Frequency with which the stored value is deleted

type ClearFrequency: Get<Self::BlockNumber>;

}

This example manipulates a single value in storage declared as SingleValue.

#[pallet::storage]

#[pallet::getter(fn single_value)]

pub(super) type SingleValue<T: Config> = StorageValue<_, u32, ValueQuery>;

SingleValue is set to 0 every ClearFrequency number of blocks in the on_finalize function

that runs at the end of blocks execution.

#[pallet::hooks]

impl<T: Config> Hooks<T::BlockNumber> for Pallet<T> {

fn on_finalize(n: T::BlockNumber) {

if (n % T::ClearFrequency::get()).is_zero() {

let c_val = SingleValue::<T>::get();

SingleValue::<T>::put(0u32);

Self::deposit_event(Event::Cleared(c_val));

}

}

}

Signed transactions may invoke the add_value runtime method to increase SingleValue as long as

each call adds less than MaxAddend. There is no anti-sybil mechanism so a user could just split a

larger request into multiple smaller requests to overcome the MaxAddend, but overflow is still

handled appropriately.

#[pallet::weight(10_000)]

pub fn add_value(origin: OriginFor<T>, val_to_add: u32) -> DispatchResultWithPostInfo {

let _ = ensure_signed(origin)?;

ensure!(

val_to_add <= T::MaxAddend::get(),

"value must be <= maximum add amount constant"

);

// previous value got

let c_val = SingleValue::<T>::get();

// checks for overflow when new value added

let result = match c_val.checked_add(val_to_add) {

Some(r) => r,

None => {

return Err(DispatchErrorWithPostInfo {

post_info: PostDispatchInfo::from(()),

error: DispatchError::Other("Addition overflowed"),

})

}

};

SingleValue::<T>::put(result);

Self::deposit_event(Event::Added(c_val, val_to_add, result));

Ok(().into())

}

In more complex patterns, the constant value may be used as a static, base value that is scaled by a multiplier to incorporate stateful context for calculating some dynamic fee (i.e. floating transaction fees).

Supplying the Constant Value

When the pallet is included in a runtime, the runtime developer supplies the value of the constant

using the

parameter_types! macro. This

pallet is included in the super-runtime where we see the following macro invocation and trait

implementation.

#![allow(unused)] fn main() { parameter_types! { pub const MaxAddend: u32 = 1738; pub const ClearFrequency: u32 = 10; } #[pallet::config] pub trait Config: frame_system::Config { type Event: From<Event> + IsType<<Self as frame_system::Config>::Event>; /// Maximum amount added per invocation type MaxAddend: Get<u32>; /// Frequency with which the stored value is deleted type ClearFrequency: Get<Self::BlockNumber>; } }

Simple Crowdfund

This pallet demonstrates a simple on-chain crowdfunding app where participants can pool funds toward a common goal. It demonstrates a pallet that controls multiple token accounts, and storing data in child storage.

Basic Usage

Any user can start a crowdfund by specifying a goal amount for the crowdfund, an end time, and a beneficiary who will receive the pooled funds if the goal is reached by the end time. If the fund is not successful, it enters into a retirement period when contributors can reclaim their pledged funds. Finally, an unsuccessful fund can be dissolved, sending any remaining tokens to the user who dissolves it.

Configuration Trait

We begin by declaring our configuration trait. In addition to the ubiquitous Event type, our

crowdfund pallet will depend on a notion of

Currency, and three

configuration constants.

#[pallet::config]

pub trait Config: frame_system::Config {

/// The ubiquious Event type

type Event: From<Event<Self>> + IsType<<Self as frame_system::Config>::Event>;

/// The currency in which the crowdfunds will be denominated

type Currency: ReservableCurrency<Self::AccountId>;

/// The amount to be held on deposit by the owner of a crowdfund

type SubmissionDeposit: Get<BalanceOf<Self>>;

/// The minimum amount that may be contributed into a crowdfund. Should almost certainly be at

/// least ExistentialDeposit.

type MinContribution: Get<BalanceOf<Self>>;

/// The period of time (in blocks) after an unsuccessful crowdfund ending during which

/// contributors are able to withdraw their funds. After this period, their funds are lost.

type RetirementPeriod: Get<Self::BlockNumber>;

}

Custom Types

Our pallet introduces a custom struct that is used to store the metadata about each fund.

#[derive(Encode, Decode, Default, PartialEq, Eq)]

#[cfg_attr(feature = "std", derive(Debug))]

pub struct FundInfo<AccountId, Balance, BlockNumber> {

/// The account that will receive the funds if the campaign is successful

pub beneficiary: AccountId,

/// The amount of deposit placed

pub deposit: Balance,

/// The total amount raised

pub raised: Balance,

/// Block number after which funding must have succeeded

pub end: BlockNumber,

/// Upper bound on `raised`

pub goal: Balance,

}

In addition to this FundInfo struct, we also introduce an index type to track the number of funds

that have ever been created and three convenience aliases.

pub type FundIndex = u32;

type AccountIdOf<T> = <T as frame_system::Config>::AccountId;

type BalanceOf<T> = <<T as Config>::Currency as Currency<AccountIdOf<T>>>::Balance;

type FundInfoOf<T> = FundInfo<AccountIdOf<T>, BalanceOf<T>, <T as frame_system::Config>::BlockNumber>;

Storage

The pallet has two storage items declared the usual way using decl_storage!. The first is the

index that tracks the number of funds, and the second is a mapping from index to FundInfo.

#[pallet::storage]

#[pallet::getter(fn funds)]

pub(super) type Funds<T: Config> = StorageMap<_, Blake2_128Concat, FundIndex, FundInfoOf<T>, OptionQuery>;

#[pallet::storage]

#[pallet::getter(fn fund_count)]

pub(super) type FundCount<T: Config> = StorageValue<_, FundIndex, ValueQuery>;

This pallet also stores the data about which users have contributed and how many funds they contributed in a child trie. This child trie is not explicitly declared anywhere.

The use of the child trie provides two advantages over using standard storage. First, it allows for removing the entirety of the trie is a single storage write when the fund is dispensed or dissolved. Second, it allows any contributor to prove that they contributed using a Merkle Proof.

Using the Child Trie API

The child API is abstracted into a few helper functions in the impl<T: Config> Module<T> block.

/// Record a contribution in the associated child trie.

pub fn contribution_put(index: FundIndex, who: &T::AccountId, balance: &BalanceOf<T>) {

let id = Self::id_from_index(index);

who.using_encoded(|b| child::put(&id, b, &balance));

}

/// Lookup a contribution in the associated child trie.

pub fn contribution_get(index: FundIndex, who: &T::AccountId) -> BalanceOf<T> {

let id = Self::id_from_index(index);

who.using_encoded(|b| child::get_or_default::<BalanceOf<T>>(&id, b))

}

/// Remove a contribution from an associated child trie.

pub fn contribution_kill(index: FundIndex, who: &T::AccountId) {

let id = Self::id_from_index(index);

who.using_encoded(|b| child::kill(&id, b));

}

/// Remove the entire record of contributions in the associated child trie in a single

/// storage write.

pub fn crowdfund_kill(index: FundIndex) {

let id = Self::id_from_index(index);

child::kill_storage(&id);

}

Because this pallet uses one trie for each active crowdfund, we need to generate a unique

ChildInfo for each of

them. To ensure that the ids are really unique, we incluce the FundIndex in the generation.

pub fn id_from_index(index: FundIndex) -> child::ChildInfo {

let mut buf = Vec::new();

buf.extend_from_slice(b"crowdfnd");

buf.extend_from_slice(&index.to_le_bytes()[..]);

child::ChildInfo::new_default(T::Hashing::hash(&buf[..]).as_ref())

}

Pallet Dispatchables

The dispatchable functions in this pallet follow a standard flow of verifying preconditions, raising appropriate errors, mutating storage, and finally emitting events. We will not present them all in this writeup, but as always, you're encouraged to experiment with the recipe.

We will look closely only at the dispense dispatchable which pays the funds to the beneficiary

after a successful crowdfund. This dispatchable, as well as dissolve, use an incentivization

scheme to encourage users of the chain to eliminate extra data as soon as possible.

Data from finished funds takes up space on chain, so it is best to settle the fund and cleanup the

data as soon as possible. To incentivize this behavior, the pallet awards the initial deposit to

whoever calls the dispense function. Users, in hopes of receiving this reward, will race to call

these cleanup methods before each other.

/// Dispense a payment to the beneficiary of a successful crowdfund.

/// The beneficiary receives the contributed funds and the caller receives

/// the deposit as a reward to incentivize clearing settled crowdfunds out of storage.

#[pallet::weight(10_000)]

pub fn dispense(origin: OriginFor<T>, index: FundIndex) -> DispatchResultWithPostInfo {

let caller = ensure_signed(origin)?;

let fund = Self::funds(index).ok_or(Error::<T>::InvalidIndex)?;

// Check that enough time has passed to remove from storage

let now = <frame_system::Module<T>>::block_number();

ensure!(now >= fund.end, Error::<T>::FundStillActive);

// Check that the fund was actually successful

ensure!(fund.raised >= fund.goal, Error::<T>::UnsuccessfulFund);

let account = Self::fund_account_id(index);

// Beneficiary collects the contributed funds

let _ = T::Currency::resolve_creating(

&fund.beneficiary,

T::Currency::withdraw(

&account,

fund.raised,

WithdrawReasons::TRANSFER,

ExistenceRequirement::AllowDeath,

)?,

);

// Caller collects the deposit

let _ = T::Currency::resolve_creating(

&caller,

T::Currency::withdraw(

&account,

fund.deposit,

WithdrawReasons::TRANSFER,

ExistenceRequirement::AllowDeath,

)?,

);

// Remove the fund info from storage

<Funds<T>>::remove(index);

// Remove all the contributor info from storage in a single write.

// This is possible thanks to the use of a child tree.

Self::crowdfund_kill(index);

Self::deposit_event(Event::Dispensed(index, now, caller));

Ok(().into())

}

This pallet also uses the Currency

Imbalance trait as discussed in

the Charity recipe, to make transfers without incurring transfer fees to the

crowdfund pallet itself.

Instantiable Pallets

Instantiable pallets enable multiple instances of the same pallet logic within a single runtime. Each instance of the pallet has its own independent storage, and extrinsics must specify which instance of the pallet they are intended for. These patterns are illustrated in the kitchen in the last-caller and default-instance pallets.

Some use cases:

- Token chain hosts two independent cryptocurrencies.

- Marketplace track users' reputations as buyers separately from their reputations as sellers.

- Governance has two (or more) houses which act similarly internally.

Substrate's own Balances and Collective pallets are good examples of real-world code using this technique. The default Substrate node has two instances of the Collectives pallet that make up its Council and Technical Committee. Each collective has its own storage, events, and configuration.

Council: collective::<Instance1>::{Module, Call, Storage, Origin<T>, Event<T>, Config<T>},

TechnicalCommittee: collective::<Instance2>::{Module, Call, Storage, Origin<T>, Event<T>, Config<T>}

Writing an Instantiable Pallet

Writing an instantiable pallet is almost entirely the same process as writing a plain non-instantiable pallet. There are just a few places where the syntax differs.

You must call

decl_storage!Instantiable pallets must call the

decl_storage!macro so that theInstancetype is created.

Configuration Trait

pub trait Config<I: Instance>: frame_system::Config {

/// The overarching event type.

type Event: From<Event<Self, I>> + Into<<Self as frame_system::Config>::Event>;

}

Storage Declaration

decl_storage! {

trait Store for Module<T: Config<I>, I: Instance> as TemplatePallet {

...

}

}

Declaring the Module Struct

decl_module! {

/// The module declaration.

pub struct Module<T: Config<I>, I: Instance> for enum Call where origin: T::Origin {

...

}

}

Accessing Storage

<Something<T, I>>::put(something);

If the storage item does not use any types specified in the configuration trait, the T is omitted, as always.

<Something<I>>::put(something);

Event initialization

fn deposit_event() = default;

Event Declaration

decl_event!(

pub enum Event<T, I> where AccountId = <T as frame_system::Config>::AccountId {

...

}

}

Installing a Pallet Instance in a Runtime

The syntax for including an instance of an instantiable pallet in a runtime is slightly different than for a regular pallet. The only exception is for pallets that use the Default Instance feature described below.

Implementing Configuration Traits

Each instance needs to be configured separately. Configuration consists of implementing the specific

instance's trait. The following snippet shows a configuration for Instance1.

impl template::Config<template::Instance1> for Runtime {

type Event = Event;

}

Using the construct_runtime! Macro

The final step of installing the pallet instance in your runtime is updating the

construct_runtime! macro. You may give each instance a meaningful name. Here I've called

Instance1 FirstTemplate.

FirstTemplate: template::<Instance1>::{Module, Call, Storage, Event<T>, Config},

Default Instance

One drawback of instantiable pallets, as we've presented them so far, is that they require the runtime designer to use the more elaborate syntax even if they only desire a single instance of the pallet. To alleviate this inconvenience, Substrate provides a feature known as DefaultInstance. This allows runtime developers to deploy an instantiable pallet exactly as they would if it were not instantiable provided they only use a single instance.

To make your instantiable pallet support DefaultInstance, you must specify it in four places.

pub trait Config<I=DefaultInstance>: frame_system::Config {

decl_storage! {

trait Store for Module<T: Config<I>, I: Instance=DefaultInstance> as TemplateModule {

...

}

}

decl_module! {

pub struct Module<T: Config<I>, I: Instance = DefaultInstance> for enum Call where origin: T::Origin {

...

}

}

decl_event!(

pub enum Event<T, I=DefaultInstance> where ... {

...

}

}

Having made these changes, a developer who uses your pallet doesn't need to know or care that your pallet is instantable. They can deploy it just as they would any other pallet.

Genesis Configuration

Some pallets require a genesis configuration to be specified. Let's look to the default Substrate node's use of the Collective pallet as an example.

In its chain_spec.rs file we see

GenesisConfig {

...

collective_Instance1: Some(CouncilConfig {

members: vec![],

phantom: Default::default(),

}),

collective_Instance2: Some(TechnicalCommitteeConfig {

members: vec![],

phantom: Default::default(),

}),

...

}

Computational Resources and Weights

Any computational resources used by a transaction must be accounted for so that appropriate fees can be applied, and it is a pallet author's job to ensure that this accounting happens. Substrate provides a mechanism known as transaction weighting to quantify the resources consumed while executing a transaction.

Indeed, mispriced EVM operations have shown how operations that underestimate cost can provide economic DOS attack vectors: Onwards; Underpriced EVM Operations

Assigning Transaction Weights

Pallet authors can annotate their dispatchable function with a weight using syntax like this,

#[weight = <Some Weighting Instance>]

fn some_call(...) -> Result {

// --snip--

}

For simple transactions a fixed weight will do. Substrate allows simply specifying a constant integer in cases situations like this.

decl_module! {

pub struct Module<T: Config> for enum Call {

#[weight = 10_000]

fn store_value(_origin, entry: u32) -> DispatchResult {

StoredValue::put(entry);

Ok(())

}

For more complex transactions, custom weight calculations can be performed that consider the